IFN Tutorial for Natural Language Processing

[1]:

import IdealFlow.Text as ift # import package.module as alias

[2]:

text = """Natural language processing (NLP) is a subfield of linguistics, computer science, and artificial intelligence.
It is concerned with the interactions between computers and human language. It can't be true human language, but it is closed enough, right?

In particular, NLP is used to program computers to process and analyze large amounts of natural language data.
"""

text

[2]:

"Natural language processing (NLP) is a subfield of linguistics, computer science, and artificial intelligence.\nIt is concerned with the interactions between computers and human language. It can't be true human language, but it is closed enough, right?\n\nIn particular, NLP is used to program computers to process and analyze large amounts of natural language data.\n"

[3]:

tp=ift.NLP("my_text")

tp.text=text

X,y=tp.prepareTextInput()

print('X:\n',X)

print('y:\n',y)

print('accuracy = ',tp.fit(X, y),'\n')

lut=tp.lut

print('\nlut=',lut)

tp.save()

print('network:\n',tp.IFNs)

sentences=""

for i in range(15):

    tr=tp.generate("my_text")

    sentence=tp.detokenize(tr)

    sentences=sentences+" "+ sentence

print(sentences,"\n")

search_text = "NLP is used"

print("query result:\n",tp.query(search_text))

print("query result:\n",tp.query("In particular"))

X="In particular, NLP is used to program"

print("predict result",tp.predict_text_category(X))

X:

[['Natural', 'language', 'processing', '(', 'NLP', ')', 'is', 'a', 'subfield', 'of', 'linguistics', ',', 'computer', 'science', ',', 'and', 'artificial', 'intelligence', '.'], ['It', 'is', 'concerned', 'with', 'the', 'interactions', 'between', 'computers', 'and', 'human', 'language', '.'], ['It', "can't", 'be', 'true', 'human', 'language', ',', 'but', 'it', 'is', 'closed', 'enough', ',', 'right', '?'], ['In', 'particular', ',', 'NLP', 'is', 'used', 'to', 'program', 'computers', 'to', 'process', 'and', 'analyze', 'large', 'amounts', 'of', 'natural', 'language', 'data', '.']]

y:

['my_text', 'my_text', 'my_text', 'my_text']

accuracy = 1.0

lut= {'k1': 'Natural', 'az2': 'language', 'ci7': 'processing', 'aw9': '(', 'bd6': 'NLP', 'ca9': ')', 'ce3': 'is', 'ba3': 'a', 'cu9': 'subfield', 'bq9': 'of', 'cf4': 'linguistics', 'ck9': ',', 'r8': 'computer', 'ab8': 'science', 'aih18': 'and', 'p6': 'artificial', 'wr16': 'intelligence', 'av8': '.', 'zc79': 'It', 'x4': 'concerned', 'f': 'with', 'ads99': 'the', 'be7': 'interactions', 'ad0': 'between', 'i': 'computers', 'bx6': 'human', 'aaz28': "can't", 'br0': 'be', 'hwv028': 'true', 's9': 'but', 'g': 'it', 'bz8': 'closed', 'y5': 'enough', 'm3': 'right', 'b': '?', 'ah4': 'In', 'bc5': 'particular', 'glm057': 'used', 'aa7': 'to', 'pl28': 'program', 'agt78': 'process', 'ct8': 'analyze', 'c': 'large', 'oz16': 'amounts', 'bs1': 'natural', 'cp4': 'data'}

network:

{'my_text': my_text}

It is used to process and analyze large amounts of linguistics, right? It can't be true human language data. Natural language data. In particular, but it is used to program computers and artificial intelligence. Natural language. It can't be true human language data. In particular, and artificial intelligence. It can't be true human language. In particular, NLP) is concerned with the interactions between computers and artificial intelligence. Natural language processing (NLP is used to process and artificial intelligence.

query result:

('Natural language, NLP is used to process and artificial intelligence.', 0.016483516483516484)

query result:

('In particular, right?', 0.014285714285714285)

predict result ('my_text', 1.0)
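The generated text above reads like a walk over word-to-word transitions learned from the four training sentences. The following is a minimal sketch of that idea in plain Python, assuming a first-order transition table and uniform random choice; it is not the IdealFlow implementation, and the names `build_transitions`, `random_walk`, `START`, and `END` are hypothetical helpers introduced only for illustration.

```python
import random
from collections import defaultdict

# Two of the tokenized sentences from the X printed above.
sentences = [
    ['It', 'is', 'concerned', 'with', 'the', 'interactions', 'between',
     'computers', 'and', 'human', 'language', '.'],
    ['In', 'particular', ',', 'NLP', 'is', 'used', 'to', 'program', 'computers',
     'to', 'process', 'and', 'analyze', 'large', 'amounts', 'of', 'natural',
     'language', 'data', '.'],
]

START, END = "<start>", "<end>"  # hypothetical sentence-boundary markers


def build_transitions(token_lists):
    """Map each token to the list of tokens observed right after it."""
    transitions = defaultdict(list)
    for tokens in token_lists:
        path = [START] + tokens + [END]
        for current, following in zip(path, path[1:]):
            transitions[current].append(following)
    return transitions


def random_walk(transitions, rng, max_steps=50):
    """Follow observed transitions from START until END or max_steps."""
    token, walk = START, []
    for _ in range(max_steps):
        token = rng.choice(transitions[token])
        if token == END:
            break
        walk.append(token)
    return walk


transitions = build_transitions(sentences)
walk = random_walk(transitions, random.Random(0))
print(" ".join(walk))
```

Because every step only looks at the current token, a walk can switch from one training sentence to another at any shared word such as `is`, `and`, or `language`, which would explain mixed sentences like those in the generated output.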

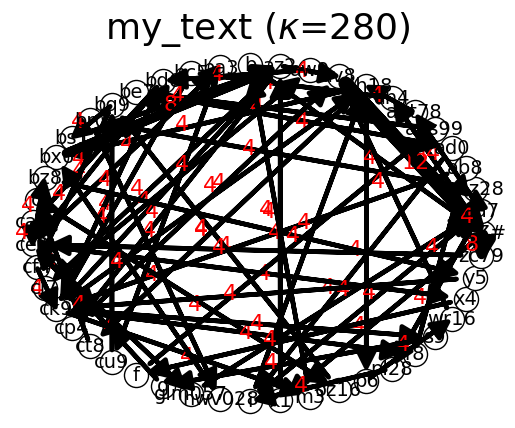

[4]:

tp.show()

[5]:

tp=ift.NLP("new_text")

tp.text=text

# Get paragraphs

paragraphs = tp.get_paragraphs()

print("Paragraphs:", paragraphs)

# Get sentences from the first paragraph

sentences = tp.get_sentences(paragraphs[0])

print("Sentences:", sentences)

# Tokenize the first sentence

tokens = tp.tokenize(sentences[0])

print("Tokens:", tokens)

# Remove stop words

filtered_tokens = tp.remove_stopwords(tokens)

print("Filtered Tokens:", filtered_tokens)

# Get bag of words

bow = tp.bag_of_words(filtered_tokens)

print("Bag of Words:", bow)

# Generate bigrams

bigrams = tp.ngrams(filtered_tokens, n=2)

print("Bigrams:", bigrams)

# Additional methods

avg_sentence_length = tp.average_sentence_length()

print("Average Sentence Length:", avg_sentence_length)

vocab_size = tp.vocabulary_size

print("Vocabulary Size:", vocab_size)

Paragraphs: ["Natural language processing (NLP) is a subfield of linguistics, computer science, and artificial intelligence.\nIt is concerned with the interactions between computers and human language. It can't be true human language, but it is closed enough, right?", 'In particular, NLP is used to program computers to process and analyze large amounts of natural language data.']

Sentences: ['Natural language processing (NLP) is a subfield of linguistics, computer science, and artificial intelligence.', 'It is concerned with the interactions between computers and human language.', "It can't be true human language, but it is closed enough, right?"]

Tokens: ['Natural', 'language', 'processing', '(', 'NLP', ')', 'is', 'a', 'subfield', 'of', 'linguistics', ',', 'computer', 'science', ',', 'and', 'artificial', 'intelligence', '.']

Filtered Tokens: ['Natural', 'language', 'processing', '(', 'NLP', ')', 'subfield', 'linguistics', ',', 'computer', 'science', ',', 'artificial', 'intelligence', '.']

Bag of Words: {'Natural': 1, 'language': 1, 'processing': 1, '(': 1, 'NLP': 1, ')': 1, 'subfield': 1, 'linguistics': 1, ',': 2, 'computer': 1, 'science': 1, 'artificial': 1, 'intelligence': 1, '.': 1}

Bigrams: [('Natural', 'language'), ('language', 'processing'), ('processing', '('), ('(', 'NLP'), ('NLP', ')'), (')', 'subfield'), ('subfield', 'linguistics'), ('linguistics', ','), (',', 'computer'), ('computer', 'science'), ('science', ','), (',', 'artificial'), ('artificial', 'intelligence'), ('intelligence', '.')]

Average Sentence Length: 16.5

Vocabulary Size: 0
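The bag-of-words, bigram, and average-length results above can be reproduced with the Python standard library. This is a sketch for comparison only, not the library's code: the `ngrams` helper is a hypothetical stand-in, and taking the average over the token counts of the four sentences in the earlier `X` output is an assumption that happens to match the printed value.

```python
from collections import Counter

# Filtered tokens copied from the output above.
filtered_tokens = ['Natural', 'language', 'processing', '(', 'NLP', ')', 'subfield',
                   'linguistics', ',', 'computer', 'science', ',', 'artificial',
                   'intelligence', '.']

# Bag of words: count how often each token occurs.
bow = dict(Counter(filtered_tokens))


def ngrams(tokens, n=2):
    """Return all runs of n consecutive tokens as tuples (hypothetical helper)."""
    return list(zip(*(tokens[i:] for i in range(n))))


bigrams = ngrams(filtered_tokens, n=2)

# Token counts of the four tokenized sentences in X from the earlier cell.
sentence_lengths = [19, 12, 15, 20]
average_length = sum(sentence_lengths) / len(sentence_lengths)

print("Bag of Words:", bow)
print("Bigrams:", bigrams)
print("Average Sentence Length:", average_length)
```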

[6]:

# start new object

tp=ift.NLP("entity and intent recognition")

text = "John Doe sent an email to jane.doe@example.com on January 5th."

entities = tp.entity_recognition(text)

print(entities)

# Predict intent and entities

input_text = "Book a flight to New York on September 21st at 5:00 PM"

recognition = tp.recognize(input_text)

print("Input Text:", input_text)

print("Predicted Intent:", recognition['intent'])

print("Identified Entities:", recognition['entities'])

# Test data for evaluation

test_data = [

    {"text": "Book a flight to Paris", "intent": "book_flight"},

    {"text": "What's the weather like today?", "intent": "weather_query"},

    {"text": "Hi there!", "intent": "greeting"},

    {"text": "Order a pizza for delivery", "intent": "order_food"}

]

# Evaluate the model

metrics = tp.evaluate(test_data)

print("Evaluation Metrics:", metrics)

sentence = "John reads books."

parsed = tp.parse_sentence(sentence)

print("Parsed Sentence:", parsed)

# Add a new intent

tp.add_intent('schedule_meeting', ['schedule', 'meeting', 'appointment'])

# Add a new entity pattern

tp.add_entity_pattern('Email', r'\b[\w.-]+@[\w.-]+\.\w{2,4}\b', "Matches email addresses")

# Update model with new training data

new_data = [

    {"text": "Schedule a meeting with Alice", "intent": "schedule_meeting"},

    {"text": "I have an appointment at 3 PM", "intent": "schedule_meeting"}

]

tp.update_model(new_data)

# Save patterns

tp.save_intent()

# Predict intent and entities

input_text = "Schedule a meeting with Bob on October 10th"

recognition = tp.recognize(input_text)

print("Input Text:", input_text)

print("Predicted Intent:", recognition['intent'])

print("Identified Entities:", recognition['entities'])

# Parse a sentence

parsed = tp.parse_sentence("Alice sends an email.")

print("Parsed Sentence:", parsed)

[{'entity': '@', 'type': 'EmotionEmoji'}, {'entity': 'D', 'type': 'EmotionEmoji'}, {'entity': 'jane.doe', 'type': 'DockerImageTag'}, {'entity': '5th', 'type': 'DockerImageTag'}, {'entity': 'o', 'type': 'EmotionEmoji'}, {'entity': 'm', 'type': 'EmotionEmoji'}, {'entity': 'c', 'type': 'EmotionEmoji'}, {'entity': 'p', 'type': 'EmotionEmoji'}, {'entity': 'h', 'type': 'EmotionEmoji'}, {'entity': 'e', 'type': 'EmotionEmoji'}, {'entity': 'on', 'type': 'DockerImageTag'}, {'entity': 'John', 'type': 'FirstName'}, {'entity': 'J', 'type': 'EmotionEmoji'}, {'entity': 'l', 'type': 'EmotionEmoji'}, {'entity': 'y', 'type': 'EmotionEmoji'}, {'entity': 'Doe sent', 'type': 'ScientificName'}, {'entity': 'an', 'type': 'DockerImageTag'}, {'entity': 'John Doe', 'type': 'Person'}, {'entity': 'd', 'type': 'EmotionEmoji'}, {'entity': 'John Doe', 'type': 'Location'}, {'entity': 'January', 'type': 'Person'}, {'entity': '5th', 'type': 'OrdinalNumber'}, {'entity': 'u', 'type': 'EmotionEmoji'}, {'entity': '5', 'type': 'EmotionEmoji'}, {'entity': 'January', 'type': 'Month'}, {'entity': 'example.com', 'type': 'URL'}, {'entity': 'i', 'type': 'EmotionEmoji'}, {'entity': 't', 'type': 'EmotionEmoji'}, {'entity': 'r', 'type': 'EmotionEmoji'}, {'entity': 'x', 'type': 'EmotionEmoji'}, {'entity': '@example.com', 'type': 'EmailDomain'}, {'entity': 'example.com', 'type': 'DockerImageTag'}, {'entity': 'email', 'type': 'DockerImageTag'}, {'entity': 'January', 'type': 'Location'}, {'entity': 'a', 'type': 'EmotionEmoji'}, {'entity': 'sent', 'type': 'DockerImageTag'}, {'entity': 'j', 'type': 'EmotionEmoji'}, {'entity': 'jane.doe@example.com', 'type': 'Email'}, {'entity': 'to', 'type': 'DockerImageTag'}, {'entity': 'n', 'type': 'EmotionEmoji'}, {'entity': 's', 'type': 'EmotionEmoji'}, {'entity': 'jane.doe', 'type': 'URL'}]

Input Text: Book a flight to New York on September 21st at 5:00 PM

Predicted Intent: schedule_meeting

Identified Entities: [{'entity': 'at 5:00 PM', 'type': 'TimeExpressionSpecific'}, {'entity': 'September', 'type': 'Month'}, {'entity': 'flight', 'type': 'DockerImageTag'}, {'entity': 'September', 'type': 'Location'}, {'entity': 'o', 'type': 'EmotionEmoji'}, {'entity': 'Y', 'type': 'EmotionEmoji'}, {'entity': 'm', 'type': 'EmotionEmoji'}, {'entity': 's', 'type': 'EmotionEmoji'}, {'entity': '2', 'type': 'EmotionEmoji'}, {'entity': 'a', 'type': 'DockerImageTag'}, {'entity': '5:00 PM', 'type': 'Time'}, {'entity': 'h', 'type': 'EmotionEmoji'}, {'entity': 'e', 'type': 'EmotionEmoji'}, {'entity': 'on', 'type': 'DockerImageTag'}, {'entity': 'w', 'type': 'EmotionEmoji'}, {'entity': 'York on', 'type': 'ScientificName'}, {'entity': 'l', 'type': 'EmotionEmoji'}, {'entity': '21st', 'type': 'OrdinalNumber'}, {'entity': '5', 'type': 'ComputerPortNumber'}, {'entity': 'PM', 'type': 'ChemicalFormula'}, {'entity': '00', 'type': 'DockerImageTag'}, {'entity': 'Book', 'type': 'Person'}, {'entity': 'Book a', 'type': 'ScientificName'}, {'entity': 'New York', 'type': 'Person'}, {'entity': 'b', 'type': 'EmotionEmoji'}, {'entity': 'Book', 'type': 'Location'}, {'entity': '5', 'type': 'DockerImageTag'}, {'entity': 'P', 'type': 'EmotionEmoji'}, {'entity': '5', 'type': 'EmotionEmoji'}, {'entity': 'New York', 'type': 'Location'}, {'entity': ':', 'type': 'EmotionEmoji'}, {'entity': 'S', 'type': 'EmotionEmoji'}, {'entity': 'i', 'type': 'EmotionEmoji'}, {'entity': 'N', 'type': 'EmotionEmoji'}, {'entity': '5:00 PM', 'type': 'Time12Hour'}, {'entity': 't', 'type': 'EmotionEmoji'}, {'entity': 'r', 'type': 'EmotionEmoji'}, {'entity': '21st', 'type': 'DockerImageTag'}, {'entity': 'at', 'type': 'DockerImageTag'}, {'entity': 'k', 'type': 'EmotionEmoji'}, {'entity': 'a', 'type': 'EmotionEmoji'}, {'entity': 'g', 'type': 'EmotionEmoji'}, {'entity': '1', 'type': 'EmotionEmoji'}, {'entity': '00', 'type': 'ComputerPortNumber'}, {'entity': 'f', 'type': 'EmotionEmoji'}, {'entity': 'M', 'type': 'EmotionEmoji'}, {'entity': '0', 'type': 'EmotionEmoji'}, {'entity': 'to', 'type': 'DockerImageTag'}, {'entity': 'September', 'type': 'Person'}, {'entity': 'n', 'type': 'EmotionEmoji'}, {'entity': 'p', 'type': 'EmotionEmoji'}, {'entity': 'PM', 'type': 'StockTicker'}, {'entity': 'B', 'type': 'EmotionEmoji'}]

Evaluation Metrics: {'accuracy': 1.0}

Parsed Sentence: {'subject': ['John', 'reads', 'books', '.']}

Intents saved successfully.

Entity patterns saved successfully.

Intents saved successfully.

Intents saved successfully.

Input Text: Schedule a meeting with Bob on October 10th

Predicted Intent: schedule_meeting

Identified Entities: [{'entity': 'with', 'type': 'DockerImageTag'}, {'entity': 'Schedule a', 'type': 'ScientificName'}, {'entity': 'O', 'type': 'EmotionEmoji'}, {'entity': 'o', 'type': 'EmotionEmoji'}, {'entity': 'm', 'type': 'EmotionEmoji'}, {'entity': 'c', 'type': 'EmotionEmoji'}, {'entity': 'a', 'type': 'DockerImageTag'}, {'entity': 'h', 'type': 'EmotionEmoji'}, {'entity': 'e', 'type': 'EmotionEmoji'}, {'entity': '10th', 'type': 'OrdinalNumber'}, {'entity': 'on', 'type': 'DockerImageTag'}, {'entity': 'w', 'type': 'EmotionEmoji'}, {'entity': 'l', 'type': 'EmotionEmoji'}, {'entity': 'meeting', 'type': 'DockerImageTag'}, {'entity': 'Bob', 'type': 'Person'}, {'entity': 'b', 'type': 'EmotionEmoji'}, {'entity': 'd', 'type': 'EmotionEmoji'}, {'entity': 'Schedule', 'type': 'Person'}, {'entity': 'Bob', 'type': 'Location'}, {'entity': '10th', 'type': 'DockerImageTag'}, {'entity': 'u', 'type': 'EmotionEmoji'}, {'entity': 'S', 'type': 'EmotionEmoji'}, {'entity': 'i', 'type': 'EmotionEmoji'}, {'entity': 'Schedule', 'type': 'Location'}, {'entity': 't', 'type': 'EmotionEmoji'}, {'entity': 'r', 'type': 'EmotionEmoji'}, {'entity': 'Bob on', 'type': 'ScientificName'}, {'entity': 'a', 'type': 'EmotionEmoji'}, {'entity': 'g', 'type': 'EmotionEmoji'}, {'entity': '1', 'type': 'EmotionEmoji'}, {'entity': 'October', 'type': 'Person'}, {'entity': '0', 'type': 'EmotionEmoji'}, {'entity': 'October', 'type': 'Month'}, {'entity': 'n', 'type': 'EmotionEmoji'}, {'entity': 'October', 'type': 'Location'}, {'entity': 'B', 'type': 'EmotionEmoji'}]

Parsed Sentence: {'subject': ['Alice', 'sends', 'email', '.']}
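The `Email` pattern passed to `add_entity_pattern` is an ordinary regular expression, so its behaviour can be checked in isolation. The sketch below applies it with Python's standard `re` module to the sample sentence from this cell; whether IdealFlow applies the pattern the same way internally is an assumption, and `EMAIL_PATTERN` is a name introduced here for illustration.

```python
import re

# Same pattern string as in the add_entity_pattern call above.
EMAIL_PATTERN = re.compile(r'\b[\w.-]+@[\w.-]+\.\w{2,4}\b')

text = "John Doe sent an email to jane.doe@example.com on January 5th."
matches = EMAIL_PATTERN.findall(text)
print(matches)

# The final \w{2,4} limits the last domain label to 2-4 characters,
# so an address with a longer top-level domain is not matched.
long_tld = EMAIL_PATTERN.findall("write to a@b.museum")
print(long_tld)
```

On the sample sentence the pattern yields the single full address, in contrast to the many overlapping single-character and partial matches that the built-in patterns report in the entity output above.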