

Agent, States, Action

Ladies and gentlemen, now is the time to introduce our superstar: the agent.

Imagine our agent as a dumb virtual robot that can learn through experience. The agent can pass from one room to another but has no knowledge of the environment. It does not know which sequence of doors it must pass through to get outside the building.

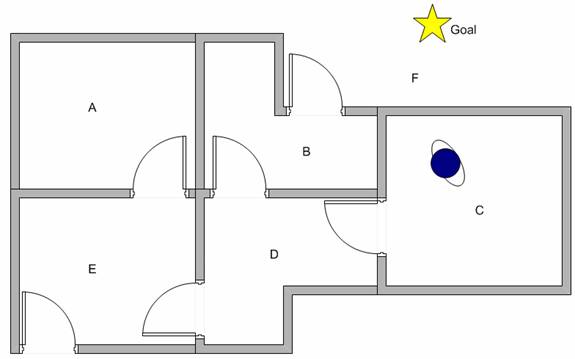

Suppose we want to model a simple evacuation of an agent from any room in the building. Now suppose we have an agent in Room C and we want the agent to learn to reach the outside of the building (F) (see the diagram).

How can we make our agent learn from experience?

Before we discuss how the agent will learn (using Q-learning) in the next section, let us define two terms: state and action.

We call each room (including the outside of the building) a state. The agent's movement from one room to another is called an action. Let us return to our state diagram. A state is depicted as a node in the state diagram, while an action is represented by an arrow.

Suppose the agent is now in state C. From state C, the agent can go to state D because state C is connected to D. However, it cannot go directly from state C to state B because no door connects rooms B and C (thus, no arrow). From state D, the agent can go to state B, to state E, or back to state C (look at the arrows out of state D). If the agent is in state E, the three possible actions are to go to state A, state F, or state D. If the agent is in state B, it can go to either state F or state D. From state A, it can only go back to state E.
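The doors described above can be written down as a small adjacency map. The following is a minimal Python sketch, not part of the original tutorial; the names `DOORS` and `can_move` are hypothetical, and state F is left out as a key because the paragraph does not list the actions available from it.

```python
# Possible actions (destination states) from each state,
# transcribed from the paragraph above. DOORS is a hypothetical name.
DOORS = {
    "A": ["E"],
    "B": ["D", "F"],
    "C": ["D"],
    "D": ["B", "C", "E"],
    "E": ["A", "D", "F"],
}


def can_move(current, target):
    """Return True if an arrow leads from `current` to `target`."""
    return target in DOORS.get(current, [])


print(can_move("C", "D"))  # C is connected to D
print(can_move("C", "B"))  # no door between B and C
```

A dictionary of lists keeps the sketch close to the state diagram: each key is a node and each list entry is an outgoing arrow.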

We can put the state diagram and the immediate reward values into the following reward table, or matrix R.

Rows give the state the agent is in now; columns give the action to go to a state.

| Agent now in state | A | B | C | D | E | F |
|---|---|---|---|---|---|---|
| A | - | - | - | - | 0 | - |
| B | - | - | - | 0 | - | 100 |
| C | - | - | - | 0 | - | - |
| D | - | 0 | 0 | - | 0 | - |
| E | 0 | - | - | 0 | - | 100 |
| F | - | 0 | - | - | 0 | 100 |

A minus sign in the table means that the row state has no action leading to the column state. For example, the agent cannot go from state A to state B (because no door connects rooms A and B, remember?).
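As an illustration, the reward table can be stored as a nested list. This is a minimal Python sketch under one assumption that is not in the tutorial: the minus-sign entries are represented by `None`. The names `STATES`, `R`, `available_actions`, and `reward` are hypothetical.

```python
STATES = ["A", "B", "C", "D", "E", "F"]

# Reward matrix R: rows are the current state, columns are the target state.
# None stands in for the "-" entries (assumption: no action exists there).
R = [
    [None, None, None, None, 0,    None],  # from A
    [None, None, None, 0,    None, 100],   # from B
    [None, None, None, 0,    None, None],  # from C
    [None, 0,    0,    None, 0,    None],  # from D
    [0,    None, None, 0,    None, 100],   # from E
    [None, 0,    None, None, 0,    100],   # from F
]


def available_actions(state):
    """List the states reachable from `state` (entries that are not None)."""
    row = R[STATES.index(state)]
    return [STATES[j] for j, r in enumerate(row) if r is not None]


def reward(state, action):
    """Immediate reward for going from `state` to `action`, or None if impossible."""
    return R[STATES.index(state)][STATES.index(action)]


print(available_actions("D"))  # states reachable from D
print(reward("E", "F"))        # reward for stepping outside from E
```

Using `None` rather than a number keeps impossible moves clearly distinct from moves that simply earn a reward of 0.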


The preferred reference for this tutorial is:

Teknomo, Kardi. 2005. Q-Learning by Examples. http://people.revoledu.com/kardi/tutorial/ReinforcementLearning/index.html