Singular Value Decomposition (SVD)

Singular value decomposition (SVD) is a factorization of a rectangular matrix $A$ into three matrices $U$, $D$ and $V$. The two matrices $U$ and $V$ are orthogonal matrices ($U^T U = I$, $V^T V = I$) while $D$ is a diagonal matrix. The factorization means that we can multiply the three matrices to get back the original matrix, $A = U D V^T$. The transpose matrix is obtained through $A^T = V D^T U^T$.
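The factorization can be checked numerically. The sketch below uses NumPy's `np.linalg.svd`; the 2x3 matrix is a hypothetical example chosen for illustration and is not taken from this tutorial. It rebuilds the matrix from its three factors and tests the orthogonality of the two outer factors.

```python
import numpy as np

# Hypothetical 2x3 matrix, chosen only for illustration.
A = np.array([[3.0, 1.0, 1.0],
              [-1.0, 3.0, 1.0]])

# full_matrices=True returns square orthogonal factors: U is 2x2, Vt (= V^T) is 3x3.
U, s, Vt = np.linalg.svd(A, full_matrices=True)

# Put the singular values on the diagonal of a matrix with the same shape as A.
D = np.zeros(A.shape)
D[:len(s), :len(s)] = np.diag(s)

A_rebuilt = U @ D @ Vt            # A = U D V^T
At_rebuilt = Vt.T @ D.T @ U.T     # A^T = V D^T U^T

print("A recovered:      ", np.allclose(A_rebuilt, A))
print("A^T recovered:    ", np.allclose(At_rebuilt, A.T))
print("U^T U = I:        ", np.allclose(U.T @ U, np.eye(U.shape[0])))
print("V^T V = I:        ", np.allclose(Vt @ Vt.T, np.eye(Vt.shape[0])))
```

Note that NumPy returns $V^T$ rather than $V$, and the singular values as a 1-D array rather than a diagonal matrix.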

Since both orthogonal and diagonal matrices have many nice properties, SVD is one of the most powerful matrix decompositions. It is used in many applications such as least squares (regression), feature selection (PCA, MDS), spectral clustering, image restoration, 3D computer vision (fundamental matrix estimation), equilibrium of Markov chains, and many others.

Matrices $U$ and $V$ are not unique. Their columns come from the concatenation of the eigenvectors of the symmetric matrices $A A^T$ and $A^T A$, respectively. Since the eigenvectors of a symmetric matrix can be chosen to be orthonormal (and hence linearly independent), they can be used as basis vectors (a coordinate system) to span a multidimensional space. The absolute value of the determinant of an orthogonal matrix is one, so the matrix always has an inverse. Furthermore, each column (and each row) of an orthogonal matrix has unit norm.
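As a minimal sketch of these properties, the code below computes the eigenvectors of the two symmetric products with `np.linalg.eigh` (NumPy's routine for symmetric matrices) and checks orthogonality, the determinant, and the column and row norms. The matrix is a hypothetical example, not one from this tutorial.

```python
import numpy as np

# Hypothetical example matrix (assumption, for illustration only).
A = np.array([[3.0, 1.0, 1.0],
              [-1.0, 3.0, 1.0]])

# eigh returns real eigenvalues in ascending order and
# orthonormal eigenvectors stored as columns.
evals_u, U = np.linalg.eigh(A @ A.T)   # 2x2 symmetric matrix
evals_v, V = np.linalg.eigh(A.T @ A)   # 3x3 symmetric matrix

for name, Q in (("U", U), ("V", V)):
    n = Q.shape[0]
    print(name, "is orthogonal:", np.allclose(Q.T @ Q, np.eye(n)))
    print(name, "|det| =", abs(np.linalg.det(Q)))
    print(name, "column norms:", np.linalg.norm(Q, axis=0))
    print(name, "row norms:   ", np.linalg.norm(Q, axis=1))
```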

The diagonal matrix $D$ contains the square roots of the eigenvalues of the symmetric matrix $A^T A$. The diagonal elements are non-negative numbers and they are called singular values. Because they come from a symmetric matrix, the eigenvalues (and the eigenvectors) are all real numbers (no complex numbers).
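The relation between singular values and eigenvalues can be verified directly. In this sketch (hypothetical matrix, NumPy assumed), the square roots of the eigenvalues of $A^T A$ are compared with the singular values returned by `np.linalg.svd`.

```python
import numpy as np

# Hypothetical example matrix (assumption, for illustration only).
A = np.array([[3.0, 1.0, 1.0],
              [-1.0, 3.0, 1.0]])

# Eigenvalues of the symmetric matrix A^T A (real, ascending order).
eigenvalues = np.linalg.eigvalsh(A.T @ A)
# Round-off can make a zero eigenvalue slightly negative; clip before the square root.
eigenvalues = np.clip(eigenvalues, 0.0, None)
from_eig = np.sqrt(eigenvalues)[::-1]          # reorder to descending

singular_values = np.linalg.svd(A, compute_uv=False)

print("sqrt of eigenvalues of A^T A:", from_eig)
print("singular values of A:        ", singular_values)
```

The 3x3 product $A^T A$ has one more eigenvalue than the 2x3 matrix has singular values; that extra eigenvalue is zero up to round-off.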

Numerical computation of the SVD is stable with respect to round-off error. When some of the singular values are nearly zero, we can truncate them to zero, which improves numerical stability.
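A minimal sketch of this truncation is shown below. The helper name `truncated_svd`, the relative tolerance `tol`, and the test matrix are all assumptions made for illustration; the appropriate threshold depends on the application.

```python
import numpy as np

def truncated_svd(A, tol=1e-10):
    """Return U, s, Vt with singular values below tol * max(s) set to zero.

    Hypothetical helper; tol is an assumed relative threshold.
    """
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    s_trunc = np.where(s > tol * s.max(), s, 0.0)
    return U, s_trunc, Vt

# A rank-1 matrix contaminated by tiny noise (simulating round-off).
rng = np.random.default_rng(0)
base = np.outer([1.0, 2.0, 3.0], [1.0, -1.0, 2.0, 0.5])
noisy = base + 1e-13 * rng.standard_normal(base.shape)

U, s, Vt = truncated_svd(noisy)
A_clean = (U * s) @ Vt          # scales the columns of U by s, then multiplies by V^T

print("singular values after truncation:", s)
print("non-zero singular values:", np.count_nonzero(s))
print("close to the noise-free matrix:", np.allclose(A_clean, base))
```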

Example:

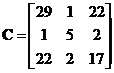

Find the SVD of matrix $A$.

Solution:

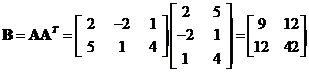

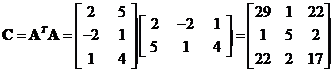

First, we multiply the matrix $A$ by its transpose to produce the symmetric matrices $A A^T$ and $A^T A$.

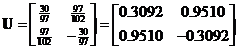

Then we find the eigenvalues and eigenvectors of the symmetric matrices. For matrix![]() , the eigenvalues are

, the eigenvalues are ![]() and

and![]() . The corresponding eigenvectors are

. The corresponding eigenvectors are and

and  respectively. Concatenating the eigenvectors produces matrix

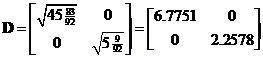

respectively. Concatenating the eigenvectors produces matrix . The diagonal matrix can be obtained from the square root of the eigenvalues

. The diagonal matrix can be obtained from the square root of the eigenvalues .

.

For matrix , the eigenvalues are

, the eigenvalues are ![]() and

and![]() . The third eigenvalue is zero as expected because the eigenvalues of

. The third eigenvalue is zero as expected because the eigenvalues of ![]() are exactly the same as the eigenvalues of matrix

are exactly the same as the eigenvalues of matrix![]() . The corresponding eigenvectors are

. The corresponding eigenvectors are ,

,  and

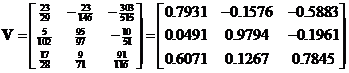

and  respectively. Concatenating the eigenvectors produces matrix

respectively. Concatenating the eigenvectors produces matrix . Since the Singular Value Decomposition factor matrix

. Since the Singular Value Decomposition factor matrix![]() , the diagonal matrix can also be obtained from

, the diagonal matrix can also be obtained from![]() .

.
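The steps of the solution can be sketched in code. The matrix below is a hypothetical 2x3 example (the tutorial's own matrix is given only as an image), and the sketch assumes that its non-zero singular values are distinct. Because each eigenvector is only determined up to sign, the diagonal of $U^T A V$ can come out negative; the sketch flips the affected columns of $V$ so that the singular values are non-negative.

```python
import numpy as np

# Hypothetical example matrix (assumption, not the tutorial's matrix).
A = np.array([[3.0, 1.0, 1.0],
              [-1.0, 3.0, 1.0]])

# Step 1: symmetric products.
AAt = A @ A.T        # 2x2
AtA = A.T @ A        # 3x3

# Step 2: eigenvalues and eigenvectors (eigh sorts ascending; reverse to descending).
evals_u, U = np.linalg.eigh(AAt)
evals_v, V = np.linalg.eigh(AtA)
evals_u, U = evals_u[::-1], U[:, ::-1].copy()
evals_v, V = evals_v[::-1], V[:, ::-1].copy()

# Step 3: the diagonal matrix from D = U^T A V.
D = U.T @ A @ V

# Eigenvectors are defined up to sign, so make the diagonal non-negative
# by flipping the matching columns of V.
signs = np.sign(np.diag(D))
signs[signs == 0] = 1.0
V[:, :len(signs)] *= signs
D = U.T @ A @ V

print("eigenvalues of A A^T:", evals_u)
print("eigenvalues of A^T A:", evals_v)
print("D =\n", np.round(D, 6))
print("A recovered:", np.allclose(U @ D @ V.T, A))
```

The diagonal of `D` matches the square roots of the eigenvalues of `AAt`, which is the other route to the diagonal matrix described above.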

Remember that the eigenvectors are solutions of a homogeneous equation. They are not unique: each one is correct up to a scalar multiple. Thus, you can multiply an eigenvector by -1 and still get a correct eigenvector. Within an SVD, the matching columns of $U$ and $V$ must be flipped together so that the product $U D V^T$ still equals $A$.
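The sign ambiguity can be illustrated with a short sketch (hypothetical matrix, NumPy assumed). Negating an eigenvector still gives an eigenvector, and negating a matching pair of left and right singular vectors leaves the reconstructed matrix unchanged, whereas negating only one of the pair does not.

```python
import numpy as np

# Hypothetical example matrix (assumption, for illustration only).
A = np.array([[3.0, 1.0, 1.0],
              [-1.0, 3.0, 1.0]])

U, s, Vt = np.linalg.svd(A, full_matrices=False)

# -u is still an eigenvector of A A^T with the same eigenvalue s[0]**2.
u = U[:, 0]
still_eigenvector = np.allclose((A @ A.T) @ (-u), (s[0] ** 2) * (-u))

# Flip the first left and right singular vectors together.
U_flip, Vt_flip = U.copy(), Vt.copy()
U_flip[:, 0] *= -1
Vt_flip[0, :] *= -1

both_flipped = (U_flip * s) @ Vt_flip   # still equals A
only_u_flipped = (U_flip * s) @ Vt      # no longer equals A

print("-u is still an eigenvector:", still_eigenvector)
print("paired flip reproduces A:  ", np.allclose(both_flipped, A))
print("single flip reproduces A:  ", np.allclose(only_u_flipped, A))
```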



Properties

In one stroke, Singular Value Decomposition (SVD) can reveal many things:

- Singular values tell whether a square matrix $A$ is singular. A square matrix $A$ is non-singular (i.e. has an inverse) if and only if all its singular values are different from zero.
- If the square matrix $A$ is non-singular, the inverse matrix can be obtained by $A^{-1} = V D^{-1} U^T$.
- The number of non-zero singular values is equal to the rank of any rectangular matrix. In fact, SVD is a robust technique for computing the rank of ill-conditioned matrices.
- The ratio between the largest and the smallest singular value is called the condition number. It measures the degree of singularity and reveals ill-conditioned matrices.
- SVD can produce one of the matrix norms, called the Frobenius norm, by taking the square root of the sum of squares of the singular values, $\|A\|_F = \sqrt{\sum_i \sigma_i^2}$. Equivalently, the Frobenius norm is the square root of the sum of the squared elements of the matrix.
- SVD can also produce the generalized inverse (pseudoinverse) of any rectangular matrix. In fact, the generalized inverse is the Moore-Penrose inverse obtained by setting $A^+ = V D^+ U^T$. Matrix $D^+$ holds the reciprocals of the singular values, as $D^{-1}$ does, but the entries for nearly zero singular values are set to zero.
- SVD also approximates the solution of the non-homogeneous linear system $A x = b$ such that the norm $\|A x - b\|$ is minimum. This is the basis of least squares, orthogonal projection and regression analysis.
- SVD also solves the homogeneous linear system $A x = 0$ by taking the column of $V$ that represents the eigenvector corresponding to the only zero eigenvalue of the symmetric matrix $A^T A$.
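Several of the properties above can be collected in one short sketch. The function name `svd_properties`, the tolerance `tol`, and the test matrices are assumptions made for illustration; NumPy's own `np.linalg.pinv`, `np.linalg.inv` and `np.linalg.lstsq` are used only as reference results.

```python
import numpy as np

def svd_properties(A, tol=1e-10):
    """Collect quantities that follow from the SVD of A (illustrative sketch)."""
    A = np.asarray(A, dtype=float)
    U, s, Vt = np.linalg.svd(A, full_matrices=True)
    k = len(s)
    keep = s > tol * s.max()                  # singular values treated as non-zero
    rank = int(keep.sum())
    cond = s.max() / s.min() if s.min() > 0 else np.inf
    frobenius = np.sqrt(np.sum(s ** 2))
    s_inv = np.zeros_like(s)
    s_inv[keep] = 1.0 / s[keep]               # near-zero singular values stay at 0
    pinv = (Vt[:k].T * s_inv) @ U[:, :k].T    # A^+ = V D^+ U^T
    null_space = Vt[rank:].T                  # columns x satisfying A x = 0
    return {"rank": rank, "cond": cond, "frobenius": frobenius,
            "pinv": pinv, "null_space": null_space}

# Non-singular square matrix: the pseudoinverse equals the ordinary inverse.
S = np.array([[2.0, 1.0],
              [1.0, 3.0]])
props_S = svd_properties(S)
print("inverse matches:", np.allclose(props_S["pinv"], np.linalg.inv(S)))
print("condition number:", props_S["cond"])

# Rectangular matrix: rank, Frobenius norm, and the homogeneous system A x = 0.
A = np.array([[3.0, 1.0, 1.0],
              [-1.0, 3.0, 1.0]])
props_A = svd_properties(A)
print("rank:", props_A["rank"])
print("Frobenius norm:", props_A["frobenius"], "vs", np.linalg.norm(A))
print("A x = 0 solved:", np.allclose(A @ props_A["null_space"], 0.0))

# Overdetermined system B x = b: least-squares solution via the pseudoinverse.
B = A.T
b = np.array([1.0, 2.0, 3.0])
x = svd_properties(B)["pinv"] @ b
x_ref = np.linalg.lstsq(B, b, rcond=None)[0]
print("least-squares solution matches:", np.allclose(x, x_ref))
```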

See also: Matrix Eigen Value & Eigen Vector for Symmetric Matrix, Similarity and Matrix Diagonalization, Symmetric Matrix, Spectral Decomposition


The preferred reference for this tutorial is

Teknomo, Kardi (2011) Linear Algebra tutorial. https:\\people.revoledu.com\kardi\tutorial\LinearAlgebra\